As computer science has evolved, so too have the ways that programmers interact with computers, though the basic purpose has stayed the same: telling computers what to do. Today's programming languages are much closer to human languages than to the binary instructions an electronic computer actually understands. This raises a question: how do computers come to understand these programming languages?

Part of the answer lies in how languages are implemented, either with a compiler or with an interpreter. This article lays the groundwork for exploring the similarities and differences between the two approaches. We'll briefly introduce the topic of programming languages and provide some relatable analogies to help you understand how programming languages speak to computers.

One quick note: a programming language is not inherently compiled or interpreted. The same language can be implemented with a compiler, an interpreter, or a combination of both. For simplicity's sake, though, we'll refer to compiled and interpreted languages throughout this article.

We’ll cover:

- A broad introduction to programming languages

- What is a compiled language?

- What is an interpreted language?

- Compiled vs interpreted: A comparison

- Get started with programming languages

A broad introduction to programming languages

At the most fundamental level, computers understand machine language: long-winded sequences of instructions made up of binary data (ones and zeros). These instructions tell the computer's physical components, or hardware, to perform operations, move information between locations, and ultimately do the task the programmer wants done.

In the early days, programming electronic computers was as much a physical job as a mental one. Machines were built largely from vacuum tubes, and programming early computers such as ENIAC meant manually setting switches and rewiring cables and plugboards, all to make the gigantic machines process equations. As labor-intensive as it was, programmers were in effect speaking machine language through their actions, feeding the machine binary data to process information and get results. Later generations of computer technology allowed programmers to input typed programs in machine language, eliminating the need for the vacuum-tube dances of the early days.

So, machine language became easier to write! However, programmers still had a huge problem: the amount of binary code necessary to tell the machine to perform even a simple command was (and is) frankly overwhelming. Take this example from The Scientist and Engineer’s Guide to Digital Signal Processing, by Steven W. Smith, which shows a machine code program for adding 1,234 and 4,321:

If machine language is this complicated for simple addition, imagine how many lines of ones and zeros you'd be staring at if you were trying to tell a CPU to run a complex algorithm. Programming a modern application at the machine-code level would be virtually impossible.

This problem led to a valuable invention: assembly language. Broadly speaking, assembly language replaces raw binary with short, human-readable mnemonics, each of which corresponds directly to a machine-code instruction. Almost every computer hardware architecture has its own unique assembly language. Translating those mnemonics into the patterns of ones and zeros that the computer executes became the job of the assembler, a program that converts assembly language into machine language according to the instruction encodings defined by the hardware's architects.

Unfortunately for programmers of the time, writing anything but the most basic program in assembly language was still difficult and time-consuming. This problem led to the creation of higher-level languages, which hide the details of assembly language so that a single statement can stand for many machine instructions. This is where we begin to see the very first iterations of modern programming languages. One of the most widely used is C, which reads much more like English than assembly does, as you can see in this code:

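```c
#include <stdio.h>

int main() {
    // Print a greeting to standard output
    printf("Hello World\n");
    return 0; // 0 tells the operating system the program succeeded
}
```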

These human-readable languages, called high-level languages, make far more sense to a programmer than binary code. But if you gave a computer a program in a high-level language like the code above, it would do nothing. That's not because the computer has anything against you, but simply because it doesn't understand it. It would be like giving your friend who only speaks English a recipe for chocolate cake written in Urdu.

For your friend to follow the recipe, its instructions need to be converted into a language your friend understands (English). You could either translate the whole recipe in advance and hand the translation to your friend, or interpret the instructions for him while he bakes. This is where the distinction is drawn between compiled and interpreted languages. In practice, the translation doesn't always go straight to binary: many compilers first produce assembly language and pass it to an assembler, which generates the final machine code, while others emit machine code directly.

What is a compiled language?

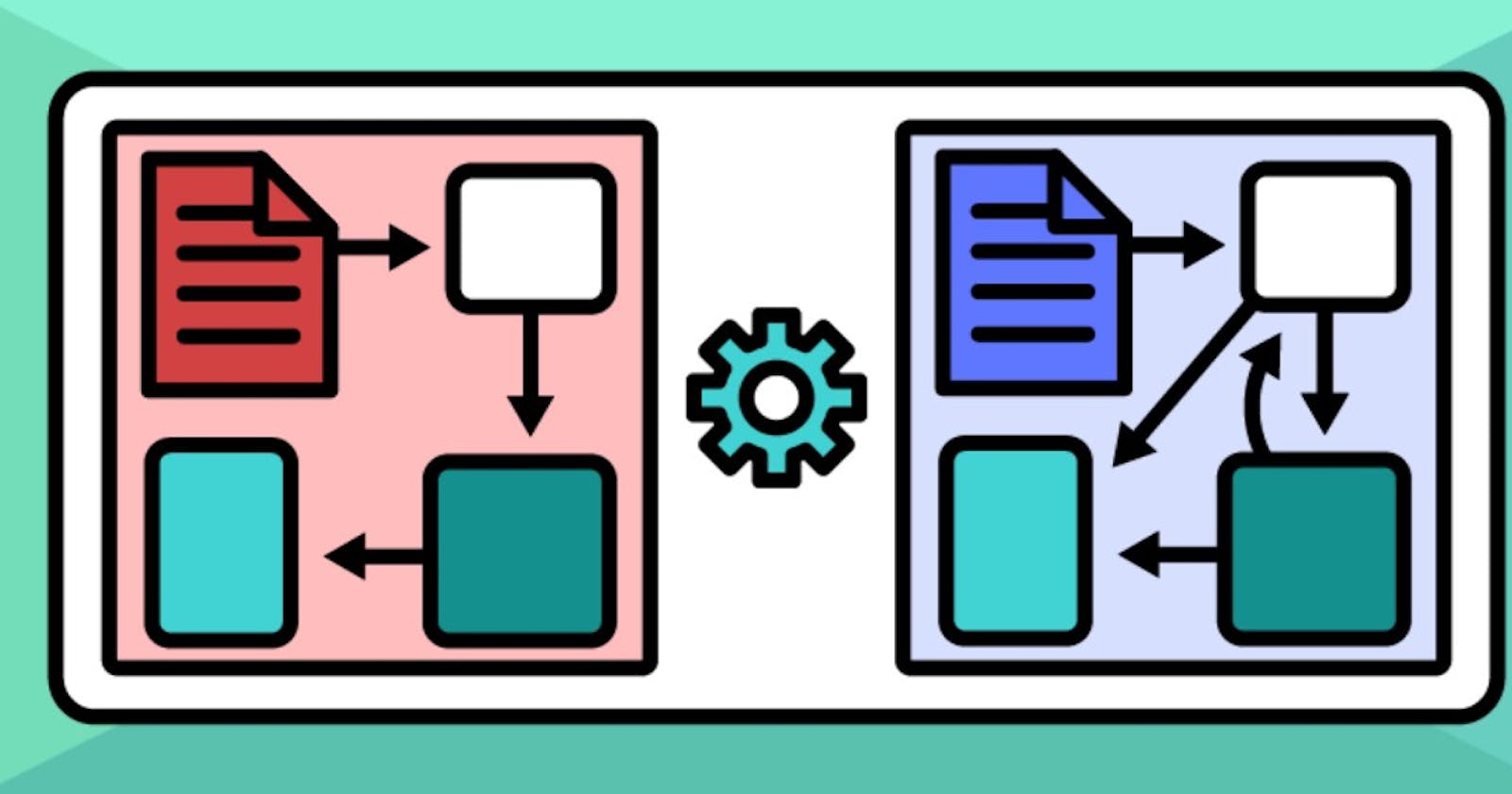

Compiled languages are programming languages whose source code is translated into machine-readable instructions by a compiler, a program that converts the entire human-readable source code before any of it is executed. The compiler generates an executable file, and the compiled program is then passed to the target machine for execution.

Programs in compiled languages tend to be fast and efficient, because their instructions have already been translated into the native language of the target machine, which can then execute them without any further assistance.

Some examples of compiled languages include:

- C, C++, and C#

- Go

- Rust

- Haskell

- COBOL

What is an interpreted language?

Interpreted languages are programming languages whose instructions are not translated into machine-readable form for the target machine ahead of time. Instead, they rely on an interpreter: a program that reads high-level, human-readable source code and carries out its instructions, typically one statement at a time, while the program is running.

Interpretation is usually less efficient, because the interpreter must run alongside the program for its entire execution, but interpreted languages are also highly adaptable.

Some examples of interpreted languages include:

- Python

- JavaScript

- PHP

- MATLAB

- Perl

- Ruby

Compiled vs interpreted: A comparison

As covered in the previous two sections, compilers and interpreters serve the same basic function: translating a program from a language the programmer understands into instructions the machine can carry out. That said, each approach has its advantages and disadvantages.

Compilers

- Translate the entirety of the source code to machine code before execution

- Tend to have faster execution speeds compared to interpreted languages

- Require additional time before testing to complete the entire compilation step

- Generate binary code that is platform-dependent

- Cannot produce an executable until all compilation errors are fixed

Interpreters

- Translate and execute each command in the source code, one at a time, before moving on to the next command

- Tend to have slower execution speeds than compiled languages because they translate the source code at run time

- Are often paired with more flexible language features, such as dynamic typing, and with smaller program sizes

- Don't generate platform-specific binary code: the same source code can run on any platform that has an interpreter, because the interpreter executes it directly

- Report errors at run time, when the interpreter reaches the offending line

Compiled languages are highly efficient in terms of processing requirements because they don't incur the extra overhead of running an interpreter. As a result, they can reliably run very quickly and use less of the computer's resources in the process. However, when a programmer wants to update software written in a compiled language, the source code must be edited and the program recompiled and relaunched before the change takes effect.

Let's return to the baking analogy. Imagine a translator has just finished compiling a full translation of a multi-step chocolate cake recipe, and a day later you (the programmer) discover a new process for making one of its components. Unfortunately, you only speak and write Urdu, and the entire recipe hinges on the new component. Not only must you rewrite the recipe, but the translator (the compiler) must also translate it all over again.

Now picture the same scenario with an interpreted language. Because the interpreter is present the entire time your friend is baking, you can very easily tell the interpreter to ask your friend to stop for a moment. You can then rewrite the new portion of the procedure yourself, edit the affected components of the recipe, and tell the interpreter to resume the process with the newly implemented procedure. Even though the process is less efficient with the interpreter, editing the instructions (program) is much simpler.

However, imagine you had a time-tested recipe for something like apple pie. Your grandparents’ grandparents passed it down to you, and it hadn’t changed in over a century. The recipe is perfect, and to add to it would do it a disservice. In this scenario, a compiled language is king. Your friend (the computer) could confidently read and carry out the instructions (program) in the most efficient way possible until the end of time without the need for disruptive iterations.

In the real world, compiled languages are preferred for computing-intensive software with heavy resource usage, or for distributed systems where optimal processor performance is a key factor. Interpreted languages are preferred for less computing-intensive applications where the CPU isn't a bottleneck, such as user interfaces. Server-side development is another common use case for interpreted languages: because the processor often spends most of its time waiting for requests or for replies from the database, sub-optimal processor performance is less of a concern.

Get started with programming languages

Compiled and interpreted languages exist for different reasons. It's because of these innovations that modern applications can accomplish so much, and if it weren’t for the vacuum-tube programmers of computing’s early days, neither category would exist.

By now you should have a basic understanding of the distinctions between compiled and interpreted languages, but we've merely given you a broad introduction to this topic. For the next steps in your learning path, you may want to learn how these types of languages intersect, as well as delve into programming in each type. You may also want to read up on more complex topics like just-in-time compilation (JIT), bytecode compilation, and object code.

Want to get started now? As a language that can be considered both interpreted and compiled, Python might be a good place to begin. It's also arguably the most in-demand programming language today. To help you master Python, we've created the Python for Programmers learning path, which covers programming fundamentals, tips for writing cleaner code, data structures, and advanced topics like using modules.

Happy learning!

Continue learning about programming languages on Educative

- Functional programming vs OOP: Which paradigm to use

- Why learn Python? 5 advantages and disadvantages

- What's the best programming language to learn first?
